Current publications and results of the consortium and the associated members of the Research Hub Neuroethics in the field of neuroethics are listed here.

Purpose

This study aims to demonstrate the importance of recognizing stress in the workplace. Novel, accurate and objective methods that use electroencephalogram (EEG) to measure brainwaves can promote employee well-being. However, using these devices can be both beneficial and potentially harmful, as manipulative practices can undermine autonomy.

Design/methodology/approach

Emphasis is placed on business ethics as it relates to the ethics of action in terms of positive and negative responsibility, autonomous decision-making and self-determined work through a literature review. The concept of relational autonomy provides an orientation toward heteronomous employment relationships.

Findings

First, using digital devices to recognize stress and promote health can be a positive outcome, expanding the definition of digital well-being as opposed to dependency, non-use or reduction. Second, the transfer of socio-relational autonomy, according to Oshana, enables criteria for self-determined work in heteronomous employment relationships. Finally, the deployment and use of such EEG-based devices for stress detection can lead to coercion and manipulation, not only in interpersonal relationships, but also directly and more subtly through the technology itself, interfering with self-determined work.

Originality/value

Stress at work and EEG-based devices measuring stress have been discussed in numerous articles. This paper is one of the first to explore ethical considerations using these brain–computer interfaces from an employee perspective.

This book offers a comprehensive review of current topics in decision making. It covers new findings relating to foundations, mechanisms, and consequences of decisions, shedding light on the cognitive processes from different disciplinary perspectives. Chapters report on psychological studies of the cognitive mechanisms of decision making, neuroimaging studies on the neural correlates, studies of patient populations to characterize alterations in decision making in specific diseases, as well as discussions concerning philosophical and ethical issues.

In recent debates on digital twins, much attention has been paid to understanding the interaction between individuals and their digital representations (Braun, Citation2021). Iglesias et al. (Citation2025) shed new light on this debate, extending the reflection on digital doppelgängers—digital twins that try to replicate the psychological dimension of an individual. They argue that such copies may serve as valuable means to achieve legacy and relational aims left unaddressed due to the person’s death. Against this background, we discuss how far we may better understand the implied normative aspects by considering them in terms of the represented person’s death. Specifically, we ask how we can and should, in normative terms, deal with a digital twin as a representation of a person after their death.

Here, we consider the decommissioning of such technology. We define decommissioning as the withdrawal, dismantling, or rendering the doppelgänger incapable of serving its original aims. We hypothesize that the way in which these digital doppelgängers ought to be decommissioned may depend upon whether they are viewed either as a proxy or as an extension of personhood. By proxy, we mean a stand-in for an individual by replicating their decisions and style without embodying their personal identity or subjective experience; something that makes decisions on your behalf but is not you (Braun and Krutzinna Citation2022). What is left behind is akin to an artifact owned by you. Whereas an extension of personhood can mean extending aspects of an individual’s identity and relational presence beyond death by reflecting their values, projects, and relationships; something that is/was a part of yourself. What is left behind is akin to an “informational corpse” (Öhman and Floridi Citation2018).

Answering this decommissioning question is necessary not only to respect the intended aims of those for whom the digital doppelgängers were created, but also to potentially respect certain social norms surrounding obsequies. Viewing digital doppelgängers either as proxies or extensions of personhood implies respective normative notions. For instance, the pursuit of any decommissioning strategy will require necessary and sufficient standards of informed consent, which may be difficult to parse given that not all individuals will view their digital doppelgänger in the same manner. The decommissioning of digital doppelgängers is thus enriched by moral nuances influenced by the perceptions we may have of this technology.

Optogenetics has potential for the treatment of retinitis pigmentosa and other rare degenerative retinal diseases. The technology allows cell activity to be controlled by combining genetic engineering with optical stimulation. First clinical studies are already being conducted, in which the vision of participating patients who were blinded by retinitis pigmentosa was partially recovered. In view of the ongoing translational process, this paper examines regulatory aspects of preclinical and clinical research as well as of a therapeutic application of optogenetics in ophthalmology. There is no prohibition or specific regulation of optogenetic methods in the European Union. Regarding preclinical research, legal issues related to animal research and stem cell research are important. In clinical research and therapeutic applications, aspects of subjects' and patients' autonomy are relevant. Because no specific regulation has so far existed at EU level for clinical studies in which a medicinal product and a medical device are evaluated simultaneously (combined studies), the requirements for clinical trials with medicinal products as well as those for clinical investigations on medical devices apply. This is the case for optogenetic clinical studies in which a viral vector classified as a gene therapy medicinal product (GTMP) is tested for the gene transfer and, simultaneously, a device qualified as a medical device is tested for the light stimulation, and it raises unresolved legal issues. Medicinal products for optogenetic therapies of retinitis pigmentosa fulfill the requirements for designation as orphan medicinal products, which comes with regulatory and financial incentives. However, equivalent regulation does not exist for medical devices for rare diseases.

Virtual reality (VR) induces a radical psychological reorientation. Yet descriptions of this reorientation are often steeped in theoretically misleading metaphors. We offer a more measured account, grounded in both philosophy and cognitive psychology, and use it to assess the claim that VR promotes moral learning by simulating another's perspective. This hypothesis depends on the assumption that avatar use produces experiences sufficiently similar to those of others to enable empathic growth. We reject that assumption and offer two arguments against it. Empathy relevant to moral learning requires interpretive effort and contextual understanding, not just a shift in perspective. And VR's open-ended, user-driven structure tends to reinforce prior assumptions rather than unsettle them. Still, avatar use may have a different effect on moral learning, which we call self-fragmentation. By loosening the boundaries of the self, VR may expand the range of people one is disposed to empathize with.

This volume focuses on the ethical dimensions of the technological framework in which human thought and action is embedded and which has been brought into the focus of cognitive science by theories of situated cognition. There is a broad spectrum of technologies that co-actualize or enable and reinforce human cognition and action and that differ in the degree of physical integration, interactivity, adaptation processes, dependency and indispensability, etc. This technological framework of human cognition and action is evolving rapidly. Some changes are continuous, others are eruptive. Technologies that use machine learning, for example, could represent a qualitative leap in the technological framework of human cognition and action. The ethical consequences of applying theories of situated cognition to practical cases have not yet received adequate attention and are explored in this volume.

Machine learning (ML) has significantly enhanced the abilities of robots, enabling them to perform a wide range of tasks in human environments and adapt to our uncertain real world. Recent works in various ML domains have highlighted the importance of accounting for fairness to ensure that these algorithms do not reproduce human biases and consequently lead to discriminatory outcomes. With robot learning systems increasingly performing more and more tasks in our everyday lives, it is crucial to understand the influence of such biases to prevent unintended behavior toward certain groups of people. In this work, we present the first survey on fairness in robot learning from an interdisciplinary perspective spanning technical, ethical, and legal challenges. We propose a taxonomy for sources of bias and the resulting types of discrimination due to them. Using examples from different robot learning domains, we examine scenarios of unfair outcomes and strategies to mitigate them. We present early advances in the field by covering different fairness definitions, ethical and legal considerations, and methods for fair robot learning. With this work, we aim to pave the road for groundbreaking developments in fair robot learning.

With regard to the dual-use problem, digitalisation in the life sciences has two distinct influences, namely an exacerbating and an expanding one. By enabling faster and more extensive research and development processes, digitalisation exacerbates the existing dual-use problem because it also increases the speed at which the results of this research can be used for security-relevant purposes. In addition, the digitalisation of the life sciences extends the dual-use problem, as some of the digital tools that are developed and used in the life sciences can themselves be used for military or security-related purposes.

Broad-based governance is therefore required, including broad stakeholder participation in the research process and the provision of information on dual use in education in good scientific practice across institutions, career stages and disciplines.

Phenomenological interview methods (PIMs) have become important tools for investigating subjective, first-person accounts of the novel experiences of people using neurotechnologies. Through the deep exploration of personal experience, PIMs help reveal both the structures shared between and notable differences across experiences. However, phenomenological methods vary on what aspects of experience they aim to capture and what they may overlook. Much discussion of phenomenological methods has remained within the philosophical and broader bioethical literature. Here, we begin with a conceptual primer and preliminary guide for using phenomenological methods to investigate the experiences of neural device users.

To improve and expand the methodology of phenomenological interviewing, especially in the context of the experience of neural device users, we first briefly survey three different PIMs, to demonstrate their features and shortcomings. Then we argue for a critical phenomenology—rejecting the ‘neutral’ phenomenological subject—that encompasses temporal and ecological aspects of the subjects involved, including interviewee and interviewer (e.g. age, gender, social situation, bodily constitution, language skills, potential cognitive disease-related impairments, traumatic memories) as well as their relationality to ensure embedded and situated interviewing. In our view, PIMs need to be based on a conception of experience that includes and emphasizes the relational and situated, as well as the anthropological, political and normative dimensions of embodied cognition.

We draw from critical phenomenology and trauma-informed qualitative work to argue for an ethically sensitive interviewing process from an applied phenomenological perspective. Drawing on these approaches to refine PIMs, researchers will be able to proceed more sensitively in exploring the interviewee’s relationship with their neuroprosthetic and will consider the relationship between interviewer and interviewee on both interpersonal and social levels.

This paper explores the potential of community-led participatory research for value orientation in the development of medical AI systems. First, conceptual aspects of participation and sharing, the current paradigms of AI development in medicine and the new challenges of AI technologies are examined. The paper proposes a shift towards a more participatory and community-led approach to AI development, illustrated by various participatory research methods in the social sciences and design thinking. It discusses the prevailing paradigms of ethics by design and embedded ethics in value customization of medical technologies and acknowledges their limitations and criticisms. It then presents a model for community-led development of AI in medicine that emphasizes the importance of involving communities at all stages of the research process. The paper argues for a shift towards a more participatory and community-led approach to AI development in medicine, which promises more effective and ethical medical AI systems.

Recent work on ecological accounts of moral responsibility and agency has argued for the importance of social environments for moral reasons responsiveness. Moral audiences can scaffold individual agents’ sensitivity to moral reasons and their motivation to act on them, but they can also undermine it. In this paper, we look at two case studies of ‘scaffolding bad’, where moral agency is undermined by social environments: street gangs and online incel communities. In discussing these case studies, we draw both on recent situated cognition literature and on scaffolded responsibility theory. We show that the way individuals are embedded into a specific social environment changes the moral considerations they are sensitive to in systematic ways because of the way these environments scaffold affective and cognitive processes, specifically those that concern the perception and treatment of ingroups and outgroups. We argue that gangs undermine reasons responsiveness to a greater extent than incel communities because gang members are more thoroughly immersed in the gang environment.

The concept of autonomy is indispensable in the history of Western thought. At least that’s how it seems to us nowadays. However, the notion has not always had the outstanding significance that we ascribe to it today and its exact meaning has also changed considerably over time. In this paper, we want to shed light on different understandings of autonomy and clearly distinguish them from each other. Our main aim is to contribute to conceptual clarity in (interdisciplinary) discourses and to point out possible pitfalls of conceptual pluralism.

Trustworthy medical AI requires transparency about the development and testing of underlying algorithms to identify biases and communicate potential risks of harm. Abundant guidance exists on how to achieve transparency for medical AI products, but it is unclear whether publicly available information adequately informs about their risks. To assess this, we retrieved public documentation on the 14 available CE-certified AI-based radiology products of the IIb risk category in the EU from vendor websites, scientific publications, and the European EUDAMED database. Using a self-designed survey, we reported on their development, validation, ethical considerations, and deployment caveats, according to trustworthy AI guidelines. We scored each question with either 0, 0.5, or 1, to rate whether the required information was “unavailable,” “partially available,” or “fully available.” The transparency of each product was calculated relative to all 55 questions. Transparency scores ranged from 6.4% to 60.9%, with a median of 29.1%. Major transparency gaps included missing documentation on training data, ethical considerations, and limitations for deployment. Ethical aspects like consent, safety monitoring, and GDPR compliance were rarely documented. Furthermore, deployment caveats for different demographics and medical settings were scarce. In conclusion, public documentation of authorized medical AI products in Europe is not transparent enough to inform about safety and risks. We call on lawmakers and regulators to establish legally mandated requirements for public and substantive transparency to fulfill the promise of trustworthy AI for health.
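The scoring arithmetic described in this abstract can be illustrated with a short sketch. The function name `transparency_score` and the example answers are hypothetical and not taken from the study; only the 0 / 0.5 / 1 scale and the total of 55 questions come from the abstract.

```python
from typing import Sequence

# Scale from the abstract: "unavailable", "partially available", "fully available"
ALLOWED_SCORES = {0.0, 0.5, 1.0}
N_QUESTIONS = 55  # number of survey questions stated in the abstract


def transparency_score(answers: Sequence[float]) -> float:
    """Return one product's transparency as a percentage of the maximum score."""
    if len(answers) != N_QUESTIONS:
        raise ValueError(f"expected {N_QUESTIONS} answers, got {len(answers)}")
    if any(a not in ALLOWED_SCORES for a in answers):
        raise ValueError("each answer must be 0, 0.5, or 1")
    return 100.0 * sum(answers) / N_QUESTIONS


# Hypothetical product: 10 questions fully answered, 12 partially, 33 not at all.
example = [1.0] * 10 + [0.5] * 12 + [0.0] * 33
print(round(transparency_score(example), 1))  # 29.1
```

In this reading, a product that collects 16 of 55 possible points scores 29.1%, which is the same value as the reported median.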

The debate regarding prediction and explainability in artificial intelligence (AI) centers around the trade-off between achieving high-performing, accurate models and the ability to understand and interpret the decision-making process of those models. In recent years, this debate has gained significant attention due to the increasing adoption of AI systems in various domains, including healthcare, finance, and criminal justice. While prediction and explainability are desirable goals in principle, the recent spread of high-accuracy yet opaque machine learning (ML) algorithms has highlighted the trade-off between the two, marking this debate as an inter-disciplinary, inter-professional arena for negotiating expertise. There is no longer an agreement about what should be the “default” balance of prediction and explainability, with various positions reflecting claims for professional jurisdiction. Overall, there appears to be a growing schism between the regulatory and ethics-based call for explainability as a condition for trustworthy AI, and how it is being designed, assimilated, and negotiated. The impetus for writing this commentary comes from recent suggestions that explainability is overrated, including the argument that explainability is not guaranteed in human healthcare experts either. To shed light on this debate, its premises, and its recent twists, we provide an overview of key arguments representing different frames, focusing on AI in healthcare.

Position papers on artificial intelligence (AI) ethics are often framed as attempts to work out technical and regulatory strategies for attaining what is commonly called trustworthy AI. In such papers, the technical and regulatory strategies are frequently analyzed in detail, but the concept of trustworthy AI is not. As a result, it remains unclear. This paper lays out a variety of possible interpretations of the concept and concludes that none of them is appropriate. The central problem is that, by framing the ethics of AI in terms of trustworthiness, we reinforce unjustified anthropocentric assumptions that stand in the way of clear analysis. Furthermore, even if we insist on a purely epistemic interpretation of the concept, according to which trustworthiness just means measurable reliability, it turns out that the analysis will, nevertheless, suffer from a subtle form of anthropocentrism. The paper goes on to develop the concept of strange error, which serves both to sharpen the initial diagnosis of the inadequacy of trustworthy AI and to articulate the novel epistemological situation created by the use of AI. The paper concludes with a discussion of how strange error puts pressure on standard practices of assessing moral culpability, particularly in the context of medicine.

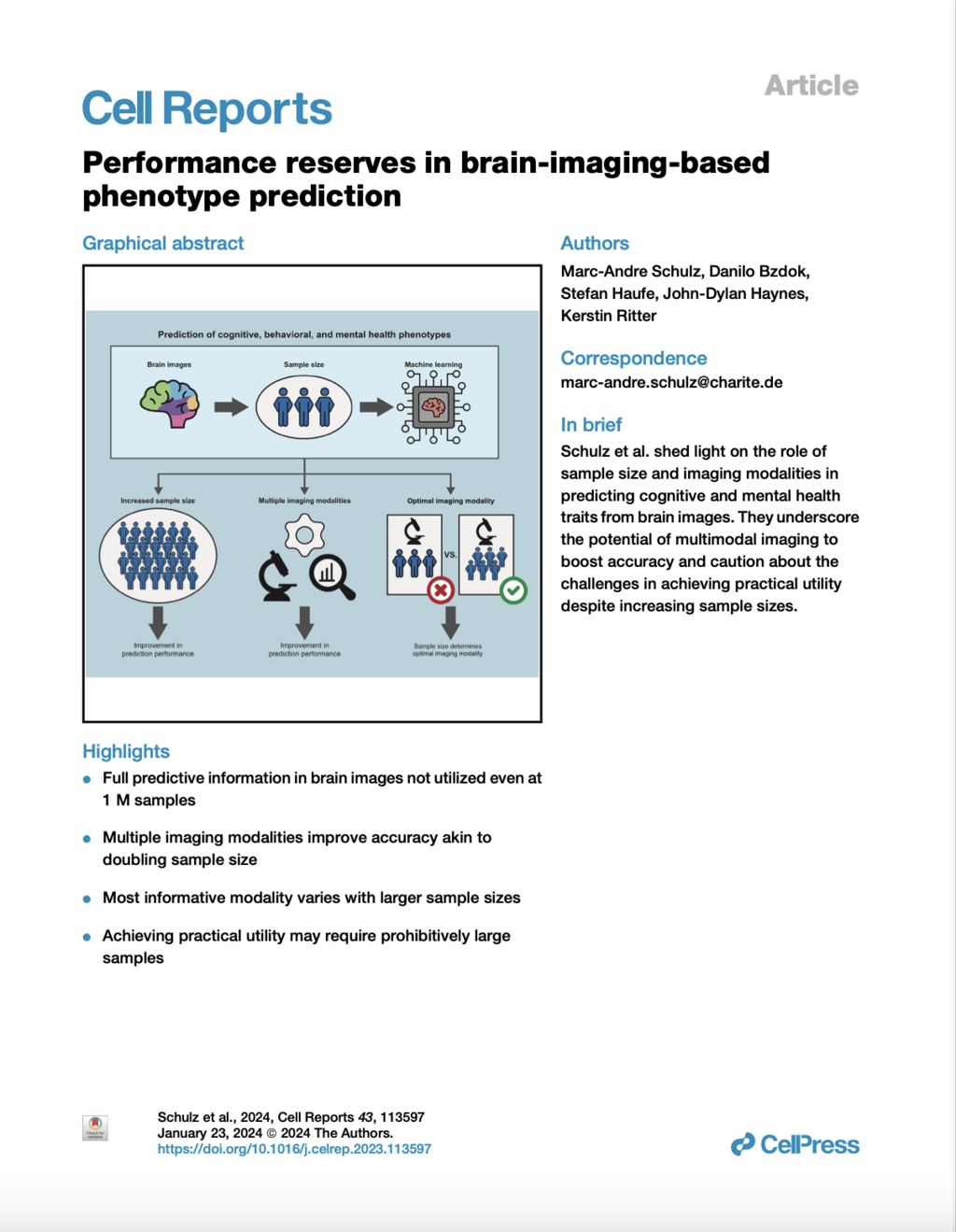

This study examines the impact of sample size on predicting cognitive and mental health phenotypes from brain imaging via machine learning. Our analysis shows a 3- to 9-fold improvement in prediction performance when sample size increases from 1,000 to 1 M participants. However, despite this increase, the data suggest that prediction accuracy remains worryingly low and far from fully exploiting the predictive potential of brain imaging data. Additionally, we find that integrating multiple imaging modalities boosts prediction accuracy, often equivalent to doubling the sample size. Interestingly, the most informative imaging modality often varied with increasing sample size, emphasizing the need to consider multiple modalities. Despite significant performance reserves for phenotype prediction, achieving substantial improvements may necessitate prohibitively large sample sizes, thus casting doubt on the practical or clinical utility of machine learning in some areas of neuroimaging.

Deep Learning (DL) has emerged as a powerful tool in neuroimaging research. DL models predicting brain pathologies, psychological behaviors, and cognitive traits from neuroimaging data have the potential to discover the neurobiological basis of these phenotypes. However, these models can be biased by spurious imaging artifacts or by the information about age and sex encoded in the neuroimaging data. In this study, we introduce a lightweight and easy-to-use framework called ‘DeepRepViz’ designed to detect such potential confounders in DL model predictions and enhance the transparency of predictive DL models. DeepRepViz comprises two components - an online visualization tool (available at https://deep-rep-viz.vercel.app/) and a metric called the ‘Con-score’. The tool enables researchers to visualize the final latent representation of their DL model and qualitatively inspect it for biases. The Con-score, or the ‘concept encoding’ score, quantifies the extent to which potential confounders like sex or age are encoded in the final latent representation and influences the model predictions. We illustrate the rationale of the Con-score formulation using a simulation experiment. Next, we demonstrate the utility of the DeepRepViz framework by applying it to three typical neuroimaging-based prediction tasks (n = 12000). These include (a) distinguishing chronic alcohol users from controls, (b) classifying sex, and (c) predicting the speed of completing a cognitive task known as ‘trail making’. In the DL model predicting chronic alcohol users, DeepRepViz uncovers a strong influence of sex on the predictions (Con-score = 0.35). In the model predicting cognitive task performance, DeepRepViz reveals that age plays a major role (Con-score = 0.3). Thus, the DeepRepViz framework enables neuroimaging researchers to systematically examine their model and identify potential biases, thereby improving the transparency of predictive DL models in neuroimaging studies.

Various forms of brain stimulation are used to treat different neurological and psychiatric diseases, as well as for research purposes. In legal discourse, the focus has so far been predominantly on deep brain stimulation. In contrast, there is a lack of discussion on methods of non-invasive brain stimulation. Against the backdrop of the legal framework changed by Regulation (EU) 2017/745, this article first provides a classification under medical device law. It then explains issues of information and consent. Additionally, it presents the legal aspects of using AI in the context of non-invasive and deep brain stimulation. Finally, it provides an outlook on the methods of neural optogenetics that are still under development.

The articles in this volume examine the extent to which current developments in the field of artificial intelligence (AI) are leading to new types of interaction processes and changing the relationship between humans and machines. First, new developments in AI-based technologies in various areas of application and development are presented. Subsequently, renowned experts discuss novel human-machine interactions from different disciplinary perspectives and question their social, ethical and epistemological implications. The volume sees itself as an interdisciplinary contribution to the sociopolitically pressing question of how current technological changes are altering human-machine relationships and what consequences this has for thinking about humans and technology.

Will neuroscientists soon be able to read minds thanks to brain imaging techniques? We cannot answer this question without knowing the state of the art in neuroimaging. But neither can we answer this question without having some understanding of the concept to which the term "mindreading" refers. This article is an attempt to develop such an understanding. Our analysis takes place in two stages. In the first stage, we provide a categorical explanation of mindreading. The categorical explanation formulates empirical conditions that must be met for mindreading to be possible. In the second stage, we develop a benchmark for assessing the performance of mindreading experiments. The resulting conceptualization of mindreading helps to reconcile folk psychological judgments about what mindreading must entail with the constraints imposed by empirical strategies for achieving it.

Predicting brain age is a relatively new tool in neuromedicine and neuroscience. It is widely used in research and clinical practice as a marker for biological age, for the general health of the brain and as an indicator of various brain-related disorders. Its usefulness in all these tasks depends on detecting outliers and thus not correctly predicting chronological age. The indicative value of age prediction comes from the gap between the chronological age of a brain and the predicted age, the "Brain Age Gap" (BAG). This article shows how the clinical and scientific application of brain age prediction tacitly pathologizes the conditions it addresses. It is argued that the tacit nature of this transformation obscures the need for its explicit justification.
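As a minimal illustration of the quantity discussed here, the Brain Age Gap can be written as a simple difference. The sign convention (predicted minus chronological age) and the function name `brain_age_gap` are assumptions made for this sketch; the abstract only states that the BAG is the gap between the two ages.

```python
def brain_age_gap(predicted_age: float, chronological_age: float) -> float:
    """Brain Age Gap under the assumed convention: predicted minus chronological age."""
    return predicted_age - chronological_age


# Hypothetical values, not taken from any study. Under the assumed convention,
# a positive gap means the model judges the brain to be "older" than the person is.
print(brain_age_gap(predicted_age=67.5, chronological_age=62.0))  # 5.5
```

The sketch makes the abstract's point concrete: the indicator only carries information when the prediction deviates from chronological age, so a gap of zero says nothing beyond the person's actual age.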

The rise of neurotechnologies, especially in combination with artificial intelligence (AI)-based methods for brain data analytics, has given rise to concerns around the protection of mental privacy, mental integrity and cognitive liberty – often framed as “neurorights” in ethical, legal, and policy discussions. Several states are now looking at including neurorights into their constitutional legal frameworks, and international institutions and organizations, such as UNESCO and the Council of Europe, are taking an active interest in developing international policy and governance guidelines on this issue. However, in many discussions of neurorights the philosophical assumptions, ethical frames of reference and legal interpretation are either not made explicit or conflict with each other. The aim of this multidisciplinary work is to provide conceptual, ethical, and legal foundations that allow for facilitating a common minimalist conceptual understanding of mental privacy, mental integrity, and cognitive liberty to facilitate scholarly, legal, and policy discussions.

Researchers in applied ethics, and particularly in some areas of bioethics, aim to develop concrete and appropriate recommendations for action in morally relevant situations in the real world. However, in moving from more abstract levels of ethical reasoning to such concrete recommendations, it seems possible to develop divergent or even contradictory recommendations for action in a given situation, even in relation to the same normative principle or norm. This may give the impression that such recommendations are arbitrary and thus not well founded. Against this background, we first want to show that ethical recommendations for action, while contingent to some extent, are not arbitrary if they are developed in an appropriate way. To this end, we examine two types of contingencies that arise in applied ethical reasoning using recent examples of recommendations for action in the context of the COVID-19 pandemic. In doing so, we refer to a three-stage model of ethical reasoning for recommendations for action. However, this leaves open the question of how applied ethics can deal with contingent recommendations for action. Therefore, in a second step, we analyze the role of bridging principles for the development of ethically appropriate recommendations for action, i.e., principles that combine normative claims with relevant empirical information to justify certain recommendations for action in a given morally relevant situation. Finally, we discuss some implications for reasoning and reporting in empirically informed ethics.

This article critically addresses the conceptualization of trust in the ethical discussion on artificial intelligence (AI) in the specific context of social robots in care. First, we attempt to define in which respect we can speak of ‘social’ robots and how their ‘social affordances’ affect the human propensity to trust in human–robot interaction. Against this background, we examine the use of the concept of ‘trust’ and ‘trustworthiness’ with respect to the guidelines and recommendations of the High-Level Expert Group on AI of the European Union.

In the context of rapid digitalisation and the emergence of consumer health technologies, neurotechnological devices are associated with further hopes, but also with major ethical and legal concerns.

Machine learning (ML) is increasingly used to predict clinical deterioration in intensive care unit (ICU) patients through scoring systems. Although promising, such algorithms often overfit their training cohort and perform worse at new hospitals. Thus, external validation is a critical – but frequently overlooked – step to establish the reliability of predicted risk scores to translate them into clinical practice. We systematically reviewed how regularly external validation of ML-based risk scores is performed and how their performance changed in external data.

This article is a preprint and has not been peer-reviewed. It reports new medical research that has yet to be evaluated and so should not be used to guide clinical practice.

Artificial intelligence (AI) applications are finding their way into our everyday lives. In the field of medicine, this involves the optimisation of diagnosis and therapy, the use of health apps of all kinds, the provision of personalised medicine tools, the possibilities of robot-assisted surgery, optimised hospital data management and the development and provision of intelligent medical products. But are these constellations actually examples of artificial intelligence? What does ‘intelligence’ mean and what conditions must be met in order to be able to speak of ‘intelligence’? These questions are answered very differently depending on the context and disciplinary anchoring, resulting in a wide-ranging and multi-faceted discourse. What is undisputed, however, is that the quality and quantity of the applications categorised as AI raise numerous legal, ethical, social and scientific questions that have yet to be comprehensively addressed and satisfactorily resolved. While the law usually lags behind practical developments when it comes to regulating technology, a paradigm shift is taking place in the field of AI: at the end of April 2021, the European Commission presented a draft ‘Regulation laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union acts’ (hereinafter referred to as the ‘VO-E’). The following explanations present the regulatory approach on which this draft is based as well as the resulting central definitions, duties and mechanisms. In the interest of a holistic approach, ethical, medical-scientific and social considerations are also taken into account.

Probably nowhere are technology and people so close, so intimately intertwined, as in the fields of medicine, therapy and care. Numerous ethical questions are therefore raised at the nexus of medicine and technology. This volume pursues a dual aim: on the one hand, to illuminate the entanglements of medicine, technology and ethics from different disciplinary perspectives, and on the other, to look at practice, at the realms of experience of people working in medicine and their interactions with technologies.

Introduction

Although machine learning classifiers have been frequently used to detect Alzheimer’s disease (AD) based on structural brain MRI data, potential bias with respect to sex and age has not yet been addressed. Here, we examine a state-of-the-art AD classifier for potential sex and age bias even in the case of balanced training data.

Methods

Based on an age- and sex-balanced cohort of 432 subjects (306 healthy controls, 126 subjects with AD) extracted from the ADNI data base, we trained a convolutional neural network to detect AD in MRI brain scans and performed ten different random training-validation-test splits to increase robustness of the results. Classifier decisions for single subjects were explained using layer-wise relevance propagation.

Results

The classifier performed significantly better, in terms of balanced accuracy, for women than for men. No significant differences were found in clinical AD scores, ruling out a disparity in disease severity as a cause for the performance difference. Analysis of the explanations revealed a larger variance in regional brain areas for male subjects compared to female subjects.

Discussion

The identified sex differences cannot be attributed to an imbalanced training dataset and therefore point to the importance of examining and reporting classifier performance across population subgroups to increase transparency and algorithmic fairness. Collecting more data especially among underrepresented subgroups and balancing the dataset are important but do not always guarantee a fair outcome.
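Reporting classifier performance across population subgroups, as recommended above, can be sketched as follows. This is a minimal illustration and not the authors' evaluation code: balanced accuracy is taken as the mean of sensitivity and specificity, and the function names, group labels and toy predictions are hypothetical.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity and specificity for binary labels (1 = AD, 0 = control)."""
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = sum(1 for t in y_true if t == 0)
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return 0.5 * (tp / n_pos + tn / n_neg)


def balanced_accuracy_by_group(y_true, y_pred, groups):
    """Compute balanced accuracy separately for each subgroup label."""
    buckets = defaultdict(lambda: ([], []))
    for t, p, g in zip(y_true, y_pred, groups):
        buckets[g][0].append(t)
        buckets[g][1].append(p)
    return {g: balanced_accuracy(ts, ps) for g, (ts, ps) in buckets.items()}


# Hypothetical toy data, not results from the study
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
print(balanced_accuracy_by_group(y_true, y_pred, groups))
```

Computing the metric per subgroup rather than only over the pooled test set is what makes a gap of this kind visible, even when the training data are balanced.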

Background:

Resources are increasingly spent on artificial intelligence (AI) solutions for medical applications aiming to improve diagnosis, treatment, and prevention of diseases. While the need for transparency and reduction of bias in data and algorithm development has been addressed in past studies, little is known about the knowledge and perception of bias among AI developers.

Objective:

This study’s objective was to survey AI specialists in health care to investigate developers’ perceptions of bias in AI algorithms for health care applications and their awareness and use of preventative measures.

Background: Accurate prediction of clinical outcomes in individual patients following acute stroke is vital for healthcare providers to optimize treatment strategies and plan further patient care. Here, we use advanced machine learning (ML) techniques to systematically compare the prediction of functional recovery, cognitive function, depression, and mortality of first-ever ischemic stroke patients and to identify the leading prognostic factors.

The use of artificial intelligence (AI), i.e. algorithmic systems that can solve complex problems independently, will foreseeably play an important role in clinical and nursing practice. In clinical practice, AI systems are already in use to support diagnostic and therapeutic decisions or to work prognostically. For several years now, systems for diagnostic support in radiology have been tested and discussed (Wu et al. 2020; McKinney et al. 2020). This is accompanied by the hope of faster, more efficient and more effective diagnoses and decisions as well as new therapy and prognosis options, especially in big data-based medicine (i.e. in medical areas that rely on the processing of large amounts of complex and unstructured data). In nursing practice, AI plays a role primarily in connection with the use of nursing robots such as Paro or Pepper, which are intended to independently animate people in need of care, communicate with them or take over certain nursing tasks (Bendel 2018).

These expectations are offset by a number of ethical concerns, for example regarding the possible effects of an increasing use of AI-based systems on the medical and nursing role or the doctor-patient relationship. In addition, questions of data protection are discussed as well as possible social implications. Another focus of the debate is the possible ethical implications of the lack of explainability or explicability of diagnoses, therapeutic decisions and prognoses by AI-based systems and their significance for patients or the interaction between humans and medical technology in general. With regard to the use of care robots, the focus of the debates is on the conditions under which such systems can be considered trustworthy or what an appropriate use for people in need of care might look like.

The expectations outlined regarding the use of AI-based systems in medicine and nursing, as well as in particular the associated ethical challenges, also raise a number of legal questions. Of particular importance are questions of civil liability and criminal responsibility in the context of the use of AI-based systems. Insofar as an applied ethical reflection of new technological developments must fundamentally also take into account the applicable legal framework and the gaps and challenges that arise therein, an examination of these questions also seems necessary with a view to ethical analysis.

Progress in neurotechnology and Artificial Intelligence (AI) provides unprecedented insights into the human brain. There are increasing possibilities to influence and measure brain activity. These developments raise multifaceted ethical and legal questions. The proponents of neurorights argue in favour of introducing new human rights to protect mental processes and brain data. This article discusses the necessity and advantages of introducing new human rights, focusing on the proposed new human right to mental self-determination and the right to freedom of thought as enshrined in Art. 18 of the International Covenant on Civil and Political Rights (ICCPR) and Art. 9 of the European Convention on Human Rights (ECHR). I argue that the right to freedom of thought can be coherently interpreted as providing comprehensive protection of mental processes and brain data, thus offering a normative basis regarding the use of neurotechnologies. Furthermore, I claim that an evolving interpretation of the right to freedom of thought is more convincing than introducing a new human right to mental self-determination.

Neuronal optogenetics is a technique to control the activity of neurons with light. This is achieved by artificial expression of light-sensitive ion channels in the target cells. By optogenetic methods, cells that are naturally light-insensitive can be made photosensitive, addressable by illumination, and precisely controllable in time and space. So far, optogenetics has primarily been a basic research tool to better understand the brain. However, initial studies are already investigating the possibility of using optogenetics in humans for future therapeutic approaches to neuronal diseases such as Parkinson's disease and epilepsy, or to promote stroke recovery. In addition, optogenetic methods have already been successfully applied to a human in an experimental setting. Neuronal optogenetics also raises ethical and legal issues, e.g., in relation to animal experiments and its application in humans. Additional ethical and legal questions may arise when optogenetic methods are investigated on cerebral organoids. Thus, for the successful translation of optogenetics from basic research to medical practice, the ethical and legal questions of this technology must also be answered, because open ethical and legal questions can hamper the translation. The paper provides an overview of the ethical and legal issues raised by neuronal optogenetics. In addition, considering the technical prerequisites for translation, the paper shows consistent approaches to address these open questions. The paper also aims to support the interdisciplinary dialogue between scientists and physicians on the one hand, and ethicists and lawyers on the other, to enable an interdisciplinary coordinated realization of neuronal optogenetics.

The increasing availability of brain data within and outside the biomedical field, combined with the application of artificial intelligence (AI) to brain data analysis, poses a challenge for ethics and governance. We highlight the particular ethical implications of brain data collection and processing and outline a multi-level governance framework. This framework aims to maximize the benefits of facilitating the collection and further processing of brain data for science and medicine while minimizing the risks and preventing harmful use. The framework consists of four primary areas for regulatory intervention: binding regulation, ethics and soft law, responsible innovation and human rights.

In the past decade, artificial intelligence (AI) has become a disruptive force around the world, offering enormous potential for innovation but also creating hazards and risks for individuals and the societies in which they live. This volume addresses the most pressing philosophical, ethical, legal, and societal challenges posed by AI. Contributors from different disciplines and sectors explore the foundational and normative aspects of responsible AI and provide a basis for a transdisciplinary approach to responsible AI. This work, which is designed to foster future discussions to develop proportional approaches to AI governance, will enable scholars, scientists, and other actors to identify normative frameworks for AI to allow societies, states, and the international community to unlock the potential for responsible innovation in this critical field. This book is also available as Open Access on Cambridge Core.

In this chapter, Philipp Kellmeyer discusses how to protect mental privacy and mental integrity in the interaction of AI-based neurotechnology from the perspective of philosophy, ethics, neuroscience, and psychology. The author argues that mental privacy and integrity are important anthropological goods that need to be protected from unjustified interferences. He then outlines the current scholarly discussion and policy initiatives about neurorights and takes the position that while existing human rights provide sufficient legal instruments, an approach is required that makes these rights actionable and justiciable to protect mental privacy and mental integrity, for example, by connecting fundamental rights to specific applied laws.

Currently, the epistemic quality of algorithms and their normative implications are particularly in focus. While general questions of justice have been addressed in this context, specific questions of epistemic (in)justice have so far been neglected. We aim to fill this gap by analyzing some potential implications of behaviorally intelligent neurotechnology (B-INT). We argue that B-INT has a number of epistemic features that carry the potential for certain epistemic problems, which in turn are likely to lead to instances of epistemic injustice. To substantiate this claim, we will first introduce and explain the terminology and technology behind B-INT. Second, we will present four fictional scenarios for the use of B-INT and highlight a number of epistemic problems that could arise. Third, we will discuss their relationship to the concept of epistemic justice and possible examples of this. Thus, we will highlight some important and morally relevant implications of the epistemic properties of INT.

Neuroenhancement is about improving a person's mental characteristics, abilities and performance. The various techniques of neuroenhancement offer new possibilities for improvement, but also harbour significant dangers. Neuroenhancement therefore poses comprehensive normative challenges both for individuals and for society as a whole. This status report provides a concise overview of the current neuroenhancement debate. The definition, techniques and purposes of neuroenhancement are discussed and arguments for and against its use are analysed on an individual, interpersonal and socio-political level.

Technological self-optimisation is currently talked about everywhere. It includes research into new possibilities with regard to cosmetic surgery, functional implantology, brain doping and extending lifespan. There are considerable social reservations about many of these technical means, which are often not legally available. In their ethical assessment, Jan-Hendrik Heinrichs and Markus Rüther argue in favour of a differentiated perspective: in their opinion, the reservations are not suitable for justifying social ostracism or even binding bans for everyone. Rather, the freedom to organise oneself has priority, but this does not mean that there do not have to be clear rules for some areas. However, because self-determination can only be free if it is informed, the authors argue in favour of regulations that are determined by extensive information obligations rather than prohibitions. From an individual perspective, a series of moral recommendations can also be formulated which, although they cannot be enforced, provide an ethical compass to guide us through the thicket of ethical debate.

Neuroimaging can be used to collect a wide range of information about processes in the human brain. This status report provides information on the basic principles and possible applications of neuroimaging techniques in the neurosciences, examines the relevant legal standards and regulations and discusses the ethical challenges that arise in relation to the use of imaging techniques in the context of medical treatment and clinical research as well as in the context of criminal law.

In particular, the risk-benefit balance, the autonomy of patients, the protection of their privacy and more general considerations of justice in clinical research are taken into account.

Explainability for artificial intelligence (AI) in medicine is a hotly debated topic. Our paper presents a review of the key arguments for and against explainability for AI-powered Clinical Decision Support Systems (CDSSs), applied to a concrete use case, namely an AI-powered CDSS currently used in the emergency call setting to identify patients with life-threatening cardiac arrest. More specifically, we performed a normative analysis using socio-technical scenarios to provide a nuanced account of the role of explainability for CDSSs for the concrete use case, allowing for abstractions to a more general level. Our analysis focused on three layers: technical considerations, human factors, and the designated system role in decision-making. Our findings suggest that whether explainability can provide added value to CDSSs depends on several key questions: technical feasibility, the level of validation in case of explainable algorithms, the characteristics of the context in which the system is implemented, the designated role in the decision-making process, and the key user group(s). Thus, each CDSS will require an individualized assessment of explainability needs, and we provide an example of what such an assessment could look like in practice.

Will brain imaging technology soon enable neuroscientists to read minds? We cannot answer this question without some understanding of the state of the art in neuroimaging. But neither can we answer this question without some understanding of the concept invoked by the term “mind reading.” This article is an attempt to develop such understanding. Our analysis proceeds in two stages. In the first stage, we provide a categorical explication of mind reading. The categorical explication articulates empirical conditions that must be satisfied if mind reading is to be achieved. In the second stage, we develop a metric for judging the proficiency of mind reading experiments. The conception of mind reading that emerges helps to reconcile folk psychological judgments about what mind reading must involve with the constraints imposed by empirical strategies for achieving it.

In this paper, we address the question of whether AI should be used for suicide prevention on social media data. We focus on algorithms that can identify persons with suicidal ideation based on their postings on social media platforms and investigate whether private companies like Facebook are justified in using these. To find out if that is the case, we start with providing two examples for AI-based means of suicide prevention in social media. Subsequently, we frame suicide prevention as an issue of beneficence, develop two fictional cases to explore the scope of the principle of beneficence and apply the lessons learned to Facebook’s employment of AI for suicide prevention. We show that Facebook is neither acting under an obligation of beneficence nor acting meritoriously. This insight leads us to the general question of who is entitled to help. We conclude that private companies like Facebook can play an important role in suicide prevention, if they comply with specific rules which we derive from beneficence and autonomy as core principles of biomedical ethics. At the same time, public bodies have an obligation to create appropriate framework conditions for AI-based tools of suicide prevention. As an outlook we depict how cooperation between public and private institutions can make an important contribution to combating suicide and, in this way, put the principle of beneficence into practice.

In this paper, I examine whether the use of artificial intelligence (AI) and automated decision-making (ADM) aggravates issues of discrimination, as has been argued by several authors. For this purpose, I first take up the lively philosophical debate on discrimination and present my own definition of the concept. Equipped with this account, I subsequently review some of the recent literature on the use of AI/ADM and discrimination. I explain how my account of discrimination helps to understand that the general claim in view of the aggravation of discrimination is unwarranted. Finally, I argue that the use of AI/ADM can, in fact, increase issues of discrimination, but in a different way than most critics assume: it is due to its epistemic opacity that AI/ADM threatens to undermine our moral deliberation, which is essential for reaching a common understanding of what should count as discrimination. As a consequence, it turns out that algorithms may actually help to detect hidden forms of discrimination.

Alcohol misuse during adolescence (AAM) has been associated with disruptive development of adolescent brains. In this longitudinal machine learning (ML) study, we could predict AAM significantly from brain structure (T1-weighted imaging and DTI) with accuracies of 73-78% in the IMAGEN dataset (n∼1182). Our results not only show that structural differences in the brain can predict AAM, but also suggest that such differences might precede AAM behavior in the data. We predicted 10 phenotypes of AAM at age 22 using brain MRI features at ages 14, 19, and 22. Binge drinking was found to be the most predictable phenotype. The most informative brain features were located in the ventricular CSF, and in white matter tracts of the corpus callosum, internal capsule, and brain stem. In the cortex, they were spread across the occipital, frontal, and temporal lobes and in the cingulate cortex. We also experimented with four different ML models and several confound control techniques. Support Vector Machine (SVM) with an RBF kernel and Gradient Boosting consistently performed better than the linear models, linear SVM and Logistic Regression. Our study also demonstrates how the choice of the predicted phenotype, ML model, and confound correction technique are all crucial decisions in an explorative ML study analyzing psychiatric disorders with small effect sizes such as AAM.

Predicting relapse for individuals with psychotic disorders is not well established, especially after discontinuation of antipsychotic treatment. We aimed to identify general prognostic factors of relapse for all participants (irrespective of treatment continuation or discontinuation) and specific predictors of relapse for treatment discontinuation, using machine learning.

Deep learning requires large labeled datasets that are difficult to gather in medical imaging due to data privacy issues and time-consuming manual labeling. Generative Adversarial Networks (GANs) can alleviate these challenges enabling synthesis of shareable data. While 2D GANs have been used to generate 2D images with their corresponding labels, they cannot capture the volumetric information of 3D medical imaging. 3D GANs are more suitable for this and have been used to generate 3D volumes but not their corresponding labels. One reason might be that synthesizing 3D volumes is challenging owing to computational limitations. In this work, we present 3D GANs for the generation of 3D medical image volumes with corresponding labels applying mixed precision to alleviate computational constraints.

We generated 3D Time-of-Flight Magnetic Resonance Angiography (TOF-MRA) patches with their corresponding brain blood vessel segmentation labels. We used four variants of 3D Wasserstein GAN (WGAN) with: 1) gradient penalty (GP), 2) GP with spectral normalization (SN), 3) SN with mixed precision (SN-MP), and 4) SN-MP with double filters per layer (c-SN-MP). The generated patches were quantitatively evaluated using the Fréchet Inception Distance (FID) and Precision and Recall of Distributions (PRD). Further, 3D U-Nets were trained with patch-label pairs from different WGAN models and their performance was compared to the performance of a benchmark U-Net trained on real data. The segmentation performance of all U-Net models was assessed using Dice Similarity Coefficient (DSC) and balanced Average Hausdorff Distance (bAVD) for a) all vessels, and b) intracranial vessels only.

Our results show that patches generated with WGAN models using mixed precision (SN-MP and c-SN-MP) yielded the lowest FID scores and the best PRD curves. Among the 3D U-Nets trained with synthetic patch-label pairs, c-SN-MP pairs achieved the highest DSC (0.841) and lowest bAVD (0.508) compared to the benchmark U-Net trained on real data (DSC 0.901; bAVD 0.294) for intracranial vessels.

In conclusion, our solution generates realistic 3D TOF-MRA patches and labels for brain vessel segmentation. We demonstrate the benefit of using mixed precision for computational efficiency resulting in the best-performing GAN-architecture. Our work paves the way towards sharing of labeled 3D medical data which would increase generalizability of deep learning models for clinical use.
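The Dice Similarity Coefficient (DSC) used above to score vessel segmentations measures the overlap between a predicted and a reference mask as 2|A ∩ B| / (|A| + |B|). The following is a minimal sketch of that formula on flattened binary masks, not the evaluation pipeline of the paper; the function name, the toy masks and the convention of returning 1.0 for two empty masks are assumptions made for illustration.

```python
def dice_coefficient(pred, truth):
    """DSC = 2|A ∩ B| / (|A| + |B|) for two binary masks given as flat 0/1 sequences."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        # Both masks empty: treated as perfect agreement (assumed convention)
        return 1.0
    return 2.0 * intersection / total


# Hypothetical toy masks (1 = vessel voxel, 0 = background)
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 0, 1, 1]
print(dice_coefficient(pred, truth))
```

A DSC of 1 means the two masks coincide exactly and 0 means they share no foreground voxel, so higher values indicate better segmentation.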

In the present article we examine the anthropological implications of “intelligent” neurotechnologies (INTs). For this purpose, we first give an introduction to current developments of INTs by specifying their central characteristics. We then present and discuss traditional anthropological concepts such as the “homo faber,” the concept of humans as “deficient beings,” and the concept of the “cyborg,” questioning their descriptive relevance regarding current neurotechnological applications. To this end, we relate these anthropological concepts to the characteristics of INTs elaborated before. As we show, the explanatory validity of the anthropological concepts analyzed in this article varies significantly. While the concept of the homo faber, for instance, is not capable of adequately describing the anthropological implications of new INTs, the cyborg proves to be capable of grasping several aspects of today’s neurotechnologies. Nevertheless, alternative explanatory models are needed in order to capture the new characteristics of INTs in their full complexity.

An essential component of human-machine interaction (HMI) is the exchange of information between humans and machines in order to achieve certain effects in the world or in the interacting machines and/or humans. However, such an exchange of information in HMI can also shape the beliefs, norms and values of the humans involved. It can thus ultimately shape not only individual but also societal values. This article describes some lines of development in HMI in which considerable changes in values are already becoming apparent. To this end, we introduce the general concept of eValuation, which serves as the starting point for elaborating three specific forms of value change, namely deValuation, reValuation and xValuation. We explain these together with examples of self-tracking practices and the use of social robots.
